
In a recent New York Times lament, former Twitter executive Yoel Roth sought to center himself as the personal, beleaguered victim of online aggression, after Elon Musk bought the company and showed him the building’s exit. “This isn’t a story I relish revisiting,” Roth wrote, before launching into a long, tendentious revisiting of his time at Twitter, while neatly cleaving out evidence made public in Twitter Files reporting that he helped to censor Americans.

But as often happens when people try to reformulate their past to create a more compelling personal narrative, Roth accidentally spilled a few truths, one being that academics are now a target. They are, but not for the false reasons he argues. First, here’s Roth in the New York Times:

Academia has become the latest target of these campaigns to undermine online safety efforts. Researchers working to understand and address the spread of online misinformation have increasingly become subjects of partisan attacks; the universities they’re affiliated with have become embroiled in lawsuits, burdensome public record requests and congressional proceedings. Facing seven-figure legal bills, even some of the largest and best-funded university labs have said they may have to abandon ship. Others targeted have elected to change their research focus based on the volume of harassment.

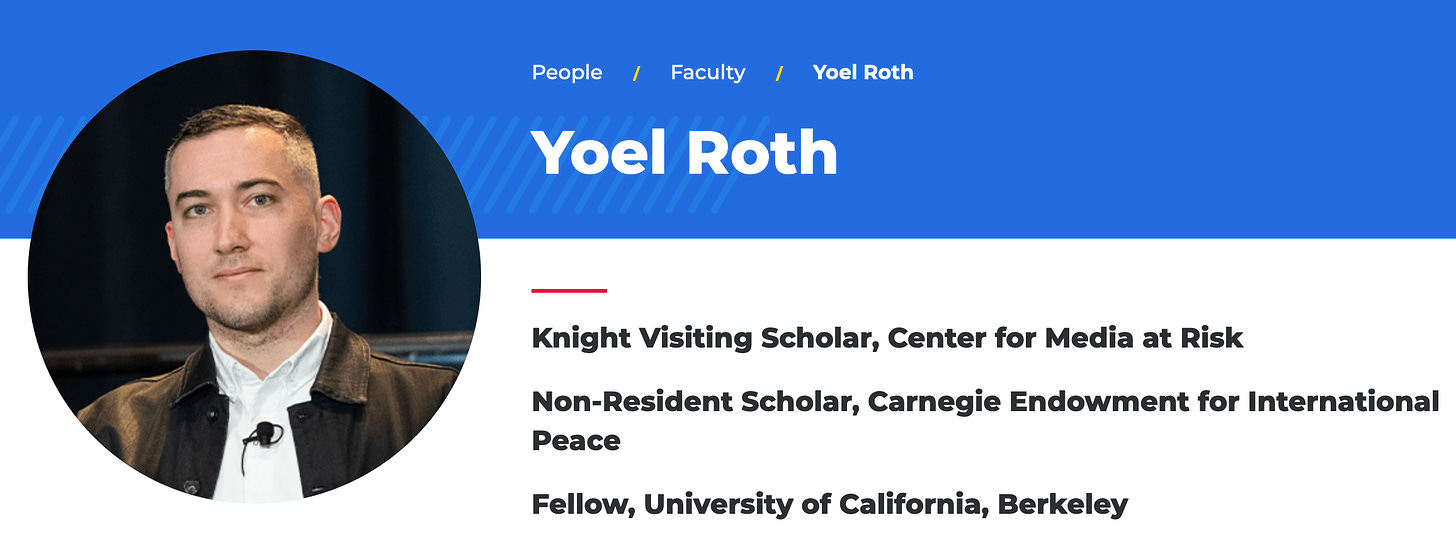

Like much of the essay, Roth’s complaint that academics are a target reads as a bit self-serving, since university professors are now his colleagues. Four months after he fled Twitter, the University of California, Berkeley took Roth in, providing him a job and status as a policy expert, and allowing Roth to recast himself from corporate censor to academic folk hero fighting online hate. He landed a second academic position at the University of Pennsylvania, where he is titled a “visiting scholar.”

But Roth’s journey from Twitter to academia was almost preordained. Social media employees who judge “misinformation” and rule on what should be banned work hand in glove with academics, a clerisy that maintains a god-like ability to sniff out misinformation and hate, as can be seen from Roth’s own emails.

In company emails, Twitter officials dismissed researcher Renee DiResta as a hobbyist in 2017, and Roth later strategized with Twitter colleagues to steer one of their own employees away from her and back to “safer territory.” Roth also seemed to dismiss the work done by New Knowledge, a company where DiResta worked against disinformation before getting caught in a scandal fomenting disinformation. But after Stanford sprinkled DiResta with scholarly holy water in 2019, giving her a job and a Stanford email address, Roth altered course, later praising DiResta as “trusted on our side,” recommending her to a video producer at CNBC, and giving talks to her class at Stanford.

These Twitter Files show that academic researchers remain critical to social media officials looking for university brands to shore up their own industry’s nebulous definition of disinformation and vague claims about what should be censored. So, of course, academics are now the target of free speech advocates and those frightened by the growing trend toward censorship.

Let’s look at Yoel Roth’s emails to see more.

Back in November 2017, New York Times technology reporter Sheera Frenkel contacted Twitter about profiles she was writing on several independent researchers who worked on disinformation, including Renee DiResta. “Have any of the researchers been brought in, or has Twitter otherwise incorporated their methods to better understand disinformation on the platform?” Frenkel asked the company, fishing for comments.

When Frenkel’s request was circulated, Twitter’s experts were less than thrilled about DiResta and the others being profiled in the New York Times as experts.

“Misinformation is becoming a cottage industry,” one Twitter official responded, regarding Frenkel’s request for comment. Another Twitter official then emailed a somewhat harsh viewpoint:

Not sure how you want to do it, but pointing out that the word “researcher” has taken on a very broad meaning—Renee is literally doing this as a hobby and is not part of any academic institution, (perhaps you could ask the journo which institution she is from).

He then offered a less severe, more Times-friendly quote:

Perhaps the simplest way to phrase it is, “There are a large number of people publishing papers, reports, blogs and tweeting about these issues. While we read many of them, we prioritise outreach to those authors publishing peer reviewed work and those who are part of academic institutions or specialist think tanks.”

He ended by offering a name that the reporter could contact “to go on the record about lazy methodology.”

I could not find the response Twitter emailed the NY Times’ Sheera Frenkel, but a week later Frenkel published a glowing profile of DiResta. In a melodramatic opening scene that seems ripped from bad daytime television, readers find DiResta dressed in pajamas and sitting in her bed, fighting a lonely online war against disinformation while struggling to stay quiet so she doesn’t wake her sleeping children.

For years, Ms. DiResta had battled disinformation campaigns, cataloging data on how malicious actors spread fake narratives online. That morning, wearing headphones so she wouldn’t wake up her two sleeping children, Ms. DiResta watched on her laptop screen as lawyers representing Facebook, Google and Twitter spoke at congressional hearings that focused on the role social media played in a Russian disinformation campaign ahead of the 2016 election.

None of the caution Twitter discussed regarding DiResta appears in Frenkel’s article, nor in a book Frenkel later co-authored titled “An Ugly Truth: Inside Facebook’s Battle for Domination.”

To better understand the close ties between technology reporters and the subjects they cover, see these previous Twitter File articles:

TWITTER FILES: Internal Company Emails Expose Why Privileged Reporters Likely Hate Elon Musk and Twitter 2.0: Musk denied special access to Twitter’s “trusted reporters,” and allowed outside journalists entrée for a more independent examination.

TWITTER FILES: Twitter Provided Privileged Access to Banning Queen, Taylor Lorenz: Twitter engineer assisting me laughed, “Wow! She’s a heavy user.”

Months later, in February 2018, DiResta makes another appearance in Twitter’s emails after she joined New Knowledge, one of the many companies popping up at the time with venture capital funding to help corporate America battle disinformation. New Knowledge was founded by Jonathan Morgan, one of the researchers, along with DiResta, about whom Sheera Frenkel had previously asked Twitter for comment.

When Frenkel contacted them, one Twitter official gave Jonathan Morgan a backhanded compliment before dismissing him: “Jonathan is the most credible but his data work seems to be as a journalist and the bulk of his work is medium blogs and not peer reviewed.”

In the February 2018 emails, Twitter employees discussed New Knowledge and how to handle a report forwarded to them alleging a malicious Twitter campaign targeting the Disney superhero film “Black Panther” with fake news.

Yoel Roth emailed that two of the accounts tweeting fake news about the film had already been suspended, and that he had suspended a third for ban evasion.

“More generally, have you responded to Rachele at new Knowledge on the basis of this report,” Roth emailed. Roth added that New Knowledge’s report “could create substantial risk” as it made allegations that were unconfirmed. He then suggested that Twitter not respond to New Knowledge and follow up directly with Disney, as New Knowledge copied them in on the emailed report.

When New Knowledge’s Jonathan Morgan and Renee DiResta then sent Twitter a proposal and other materials a few months later, Twitter promised to get back to them.

“Thank you Jonathan for the note and case studies. We’ll review internally and get back to you soon.”

But in follow-up emails a few weeks later, Twitter did not seem interested, as New Knowledge was not offering them anything unique. Writing to Roth, one Twitter employee emailed, “My thought on this is we should pause this since we are likely to get something similar from FirstDraft.”

Roth made clear his concerns about working with DiResta in September 2018, when he began strategizing on how to direct another Twitter employee away from her. “FYI, this is about working with Renee DiResta,” Roth wrote, forwarding an email from one employee wanting to discuss working with outside groups and scouting them for potential hires. “We need to tread carefully and steer Jasper to safer territory,” Roth added. “I tried but he’s pushing it pretty hard.”

“Spoke to him at tea time,” another Twitter official responded. “So I think we can make good progress and manage the [Renee DiResta] risk.”

New Knowledge imploded a few months later.

The Senate Intelligence Committee had been holding a series of hearings in late 2018 on Russian interference in the 2016 election and published outside reports on social media tactics used by Russia’s Internet Research Agency (IRA). At the request of the Senate, several researchers, as well as Renee DiResta and other New Knowledge employees, had written a 99-page report titled “The Tactics & Tropes of the Internet Research Agency.”

“We hope that this analysis of the IRA information warfare arsenal – particularly the discussion of the influence operation tactics – helps policymakers and American citizens alike to understand the sophistication of the adversary,” the report states, “and to be aware of the ongoing threat to American democracy.”

The following day, the New York Times published an investigation detailing another threat to American democracy: New Knowledge. Jonathan Morgan had been caught along with other political operatives in a secret project that used Russian tactics to influence an Alabama Senate race in favor of the Democrat.

An internal report on the Alabama effort, obtained by The New York Times, says explicitly that it “experimented with many of the tactics now understood to have influenced the 2016 elections.”

The project’s operators created a Facebook page on which they posed as conservative Alabamians, using it to try to divide Republicans and even to endorse a write-in candidate to draw votes from Mr. Moore. It involved a scheme to link the Moore campaign to thousands of Russian accounts that suddenly began following the Republican candidate on Twitter, a development that drew national media attention.

“We orchestrated an elaborate ‘false flag’ operation that planted the idea that the Moore campaign was amplified on social media by a Russian botnet,” the report says.

The story noted that Morgan reached out at the time to DiResta, who told the Times that Morgan asked her for suggestions of online tactics worth testing. “My understanding was that they were going to investigate to what extent they could grow audiences for Facebook pages using sensational news,” DiResta said.

In early 2019, DiResta left New Knowledge, which later changed its name to Yonder. That summer, she joined Stanford University, and along with Alex Stamos, launched the Internet Observatory with funding from Craigslist founder Craig Newmark, one of the major donors in the “disinformation” space. Discussing the new group, Twitter officials agreed to watch it, but did not seem impressed.

“A recent Wired article had more details,” one employee emailed Roth and others, sending a link to the Wired piece on DiResta’s new Stanford project. “I haven’t clicked since Wired has a limit on articles and this isn’t important enough.”

DiResta later sent an email to Twitter, with a subject line announcing her important new Stanford gig: Hello from Stanford Internet Observatory 🙂

DiResta explained that the Internet Observatory could be helpful to Twitter’s research efforts. “Up for a quick call or coffee at Twitter HQ sometime in the next week or two?”

With DiResta now baptized by Stanford and converted from hobbyist to academic scholar, Roth apparently no longer felt the “need to tread carefully” and steer away from her “to safer territory.” After asking DiResta to send in some dates to get her on Twitter’s calendar, Roth wrote that he was working with her Stanford boss Alex Stamos to plan collaborations. “Nothing especially formal needed—just maybe a sketch of what your ideal [version one] of a collaboration could look like.”

That fall, DiResta sent Roth a one-pager laying out a possible collaboration with Twitter. By the spring of 2020, Roth sent Stamos and DiResta an invite to collaborate on coronavirus research, asking them to please refrain from sharing the email. “We’re reaching out to select researchers that we believe may be in the best position to effectively use the vast scale of this data.” Roth added that Twitter was not “currently in a position to partner or closely collaborate with you beyond providing access to the data….”

Roth disclosed his true feelings in a later email to Stamos and DiResta: “And now a follow-up beyond the boilerplate our comms people asked me to send: We would LOVE to partner closely on anything in the IO universe that you find using this data.”

A few months later, a video producer with CNBC contacted Twitter to interview someone about COVID-19 and misinformation. “I’d love to include someone from Twitter!” he wrote. “I’d love to include a voice from the company who could talk about how it deals with misinformation in general and any policies that may be in place around it.”

“Would it make sense to connect CNBC with Graphika,” a Twitter comms employee emailed Roth, citing the firm Time Magazine recently profiled as one of the most important companies in combating misinformation.

But Roth seemed a bit wary of Graphika and recommended three academics including DiResta. “I’d maybe suggest folks like Kate Starbird (University of Washington) Claire Wardle (First Draft), or Renee DiResta (Stanford)—all of whom are equally trusted on our side, and can likely be useful voices.”

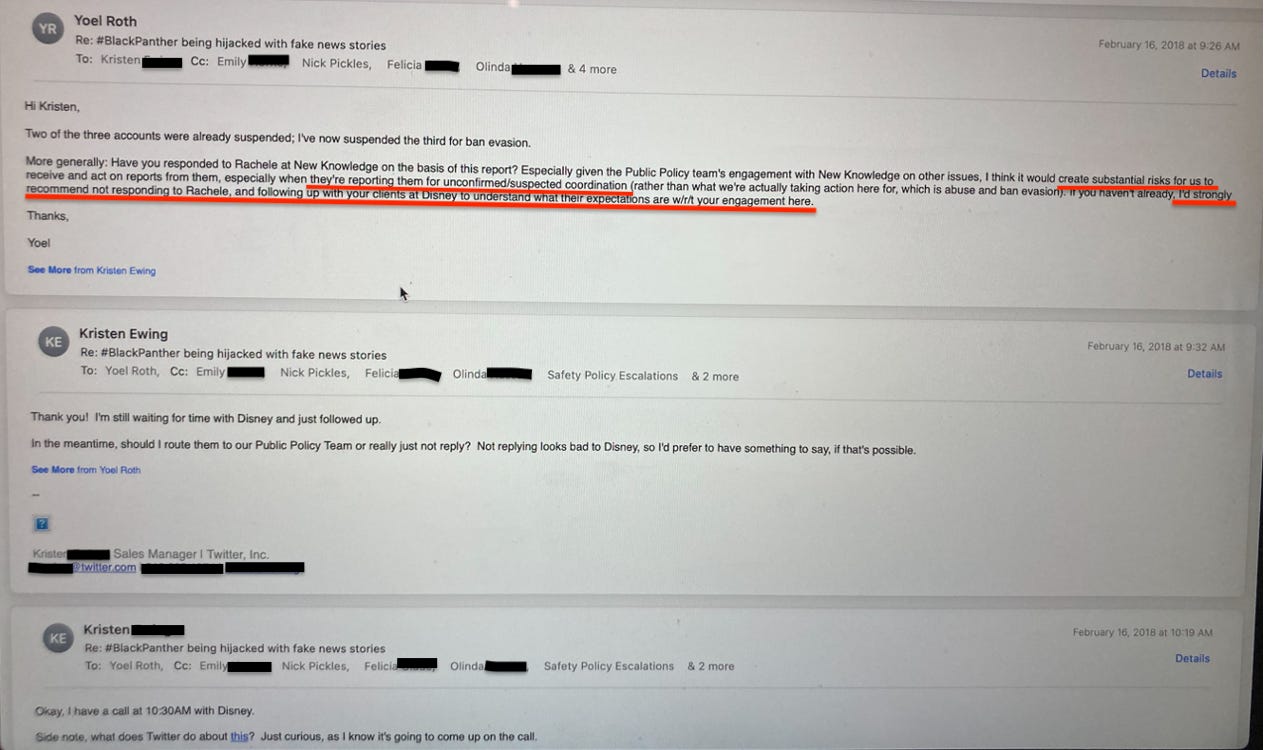

Over the succeeding two years, Twitter continued working with DiResta and her Stanford colleagues. In fact, DiResta’s Stanford Internet Observatory invited Roth in 2021 to speak to their class, along with Olga Belogolova, who then handled censorship matters at Facebook.

“Thanks so much!” Yoel replied by email. “I’m happy to join.”

Like Roth, Belogolova has joined academia and currently teaches a course on disinformation at the Alperovitch Institute for Cybersecurity Studies at Johns Hopkins SAIS.

Twitter and the Stanford Internet Observatory even shared interns.

“Any chance you know anyone who might be interested in a 3-month, well-paid gig on my team,” Roth emailed DiResta and other Stanford researchers in April 2021. “We’d be embedding the person in this internship.”

“We have a couple of mailing list that should have interested students,” Stanford’s Stamos responded.

“Thank you!” Roth replied.

But Twitter began going through dramatic changes when Elon Musk bought it in October 2022, only weeks before the midterm elections.

On November 1, 2022, DiResta and her Stanford colleagues released an analysis of data Twitter had provided involving inauthentic networks with technical links to China and Iran that had tweeted about America’s midterm elections.

Having once guided Twitter away from DiResta “to safer territory” before promoting her to CNBC once Stanford blessed her—“trusted on our side”—Roth now treated DiResta as an equal, giving talks to her Stanford class and reaching out to find interns.

Working closely together as colleagues, Roth now deemed Stanford’s DiResta an “independent” academic.

POSTSCRIPT: Bloomberg just published an article detailing the multiple research scandals plaguing Stanford, with one former professor describing as “pixie dust” the magical powers the university wields in Silicon Valley. The National Science Foundation awarded $3 million in 2021 to the Stanford Internet Observatory to collaborate with the University of Washington and explore new areas of study in the mis- and disinformation field.

Total federal dollars funding “disinformation research” remain unknown, but the National Institutes of Health recently paused a $154 million research program after courts began finding agencies at fault for censoring Americans. In this light, what is the public interest in pouring public dollars into universities for research that teaches governments how to censor the public?

More importantly, when does the Stanford Internet Observatory add to the growing list of Stanford research scandals that must be buried to protect the shine for university donors?