[Newscaster]: We’re interrupting your Thanksgiving to bring you this newsletter.

Yesterday, at The Information, in the ongoing drama surrounding OpenAI:

Later, at Reuters, more developments:

The technique in question appears to be something OpenAI is calling, rather ominously, Q*, pronounced “Q-Star.”

Get it? Perfect fodder for anyone who wants to freak out about the Death Star killing us all. (Actually, though, it seems to be named in reference to a clever but not particularly nefarious AI technique known as A* that’s often used to power character movements in video games.)

The board may have been genuinely concerned about the new technique. (It’s also likely that they had other things on their minds; according to the same Reuters story, the Q* matter is supposed to be “[just] one factor in a longer list of board grievances that led to Altman’s firing, among which was concern about marketing advances before understanding the consequences.”)

§

Me? I’m pretty skeptical; I was just kidding when I wrote this:

§

Part of the joke is that I don’t in reality think Bing is already fueled by the supposed advance. A single Bing error a few months after this putative breakthrough won’t tell us much; even if it were real, putting Q* (or any other algorithm) into production that fast would be extraordinary. Although there is talk of OpenAI already trying to test the new technique, it would be unrealistic to expect them to completely change the world in a matter of months. We can’t really take Bing in 2023 as telling us anything about what Q* might do in 2024 or 2025.

But then again, I’ve seen this movie before, often.

OpenAI could in fact have a breakthrough that fundamentally changes the world.

But “breakthroughs” rarely turn out to be general enough to meet rosy initial expectations. Often, advances work in some contexts, not others. Certainly every putative breakthrough in driverless cars has been of this type; someone finds something new, it looks good at first, maybe it even helps a little, but at the end of the day, “autonomous” cars are still not reliable enough for prime time; no single advance has gotten us over the threshold. AVs still aren’t general enough that you can drop a car that was tuned in pilot studies in Menlo Park, SF, and Arizona into Sicily or Mumbai and expect it to drive gracefully and safely. We’re probably still many “steps” away from true Level 5 driverless cars.

§

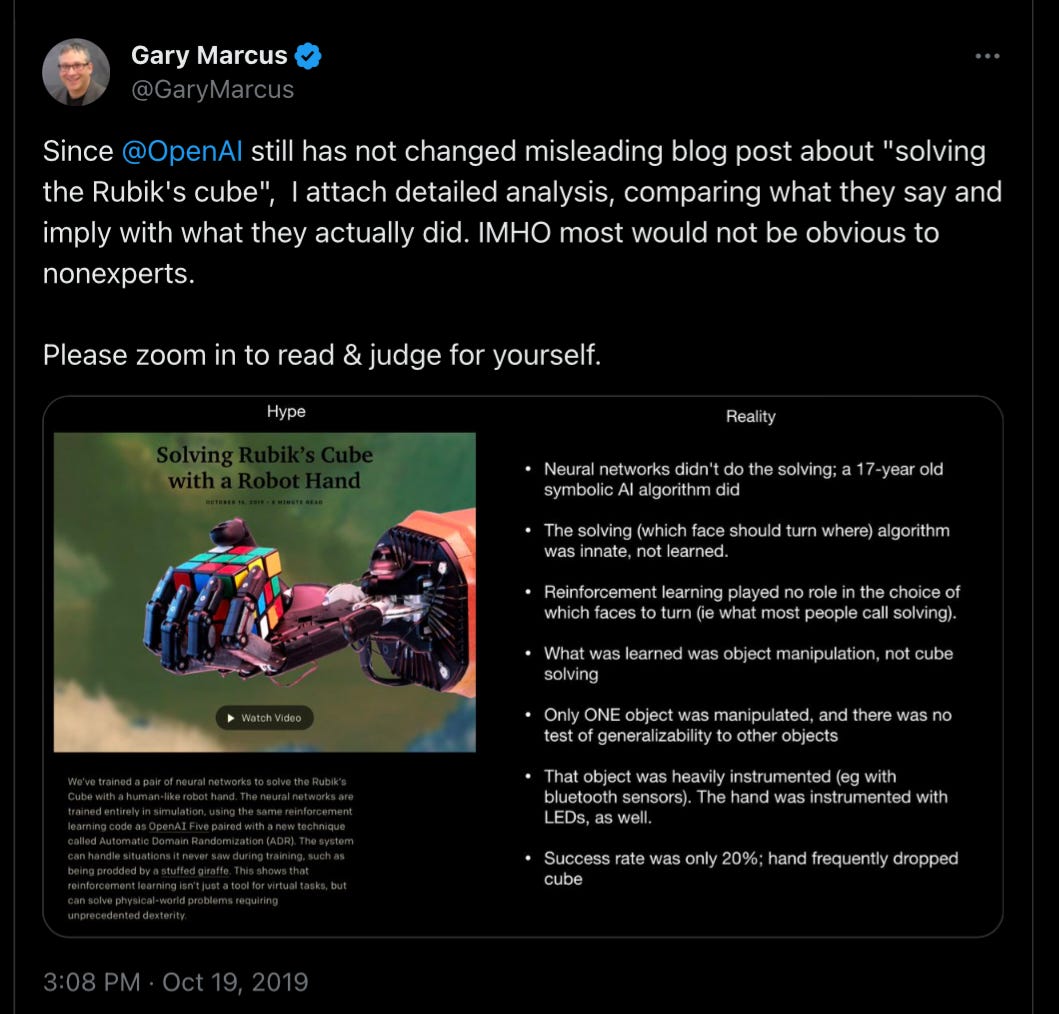

Or consider what OpenAI touted as an extraordinary breakthrough in 2019, when they released a video and blog post about how they got a robot to solve a Rubik’s Cube:

To many, the result sounded amazing. VentureBeat credulously repeated OpenAI’s PR pitch wholesale: “OpenAI, the San Francisco-based AI research company co-founded by Elon Musk and others, backed by such luminaries as LinkedIn co-founder Reid Hoffman and former Y Combinator president Sam Altman, says it is on the verge of solving a grand challenge in robotics and AI systems.”

Me being me, I called bullshit, slamming OpenAI on Twitter a few days later:

Probably the most relevant point there (at least for present purposes) was the one about generalization; getting their algorithm to work for one object (which, cheekily, turned out to be a special cube equipped with sensors and LEDs, as opposed to a Rubik’s Cube you’d buy in a store) under carefully controlled laboratory circumstances is hardly a guarantee that the solution would work more widely in the complex, open-ended real world.

Despite my concerns, OpenAI got a lot of press, and maybe some investment or recruits or both out of the press release. It looked good at the time.

But you know what? It didn’t go anywhere. A year or two later, they quietly shut down the robotics division.

§

What struck me most about the Reuters piece was the wild extrapolation at the end of this passage:

If I had a nickel for every extrapolation like that (today, it works for elementary-school students! Next year, it will take over the world!), I would be Musk-level rich.

§

All that being said, I am a scientist. The fact that past advances have been greatly exaggerated does not guarantee that no future advance will pan out. Sometimes you get nonsense about room-temperature superconductors (such narratives always play well at first), and sometimes things actually work.

Everything is still an empirical matter; I certainly don’t know enough about the details of Q* yet to judge for sure.

Time will tell.

But as for me, well… I have 99 problems to worry about (mainly the potential collapse of the EU AI Act and the likely effects of AI-generated disinformation on the 2024 election).

At least so far, Q* is not one.

Gary Marcus has been resisting the hype for three decades; he has only very rarely been wrong.